We’ve all been using AI for a while (hello Siri, Alexa, and Google Assistant!), but these days generative AI seems to be all anyone can talk about.

It’s easy to see why. Artificial intelligence can now spit out an entire article based on just a few prompts (looking at you, ChatGPT). AI image generators like Midjourney, DALL-E 3, and Stable Diffusion can produce illustrations, photos, and other graphics.

There’s no doubt that these tools can be incredibly helpful. But are AI art generators ethical? And if those waters are murky, what’s the best way to navigate them?

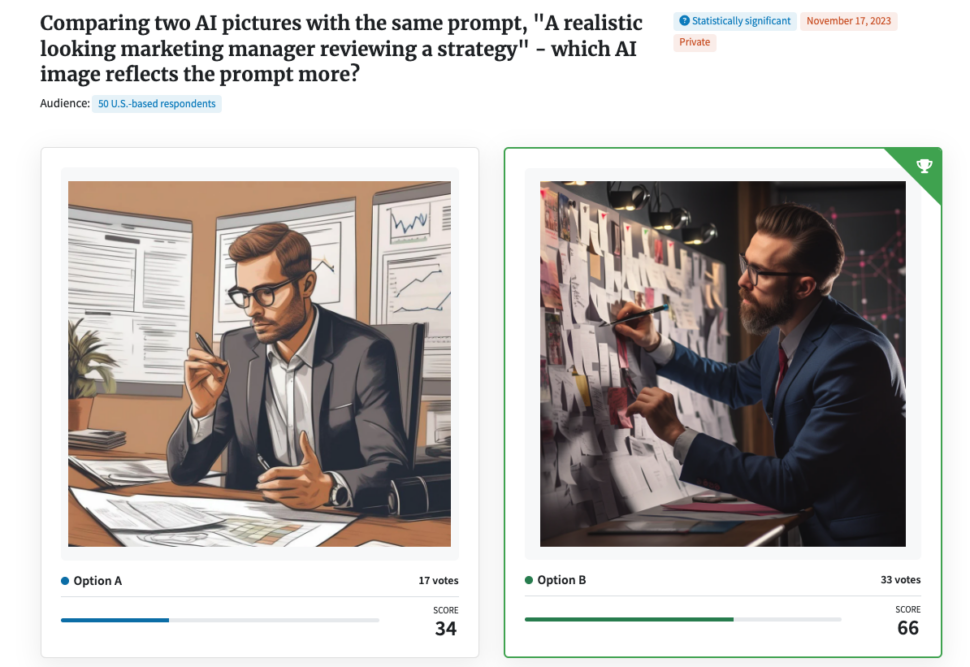

We’ll explore these questions and compare some of the most popular AI art generators. We also ran a 50-person PickFu poll to see whether our audience preferred an image from Midjourney or one from Stable Diffusion.

The winner didn’t surprise us, but the feedback did.

You’ll get to see those results for yourself. But first, read on to see what generative AI is and how we can use it ethically.

A quick introduction to generative AI

The field of generative AI is new, complex, and rapidly changing. So we’ll stick to a basic definition.

Generative AI works like this: the AI model is “trained” on vast amounts of data, much of it scraped from the web. When prompted, it draws on the patterns it learned from that data to combine elements into an output.

Let’s say you want Midjourney to create a photorealistic image of a dog bounding through the Scottish Highlands. You enter the request into the system, and it draws on everything it learned about those keywords to come up with an image.

Some tech companies claim their generative AI tools create new or original work, but critics disagree.

AI software doesn’t actually come up with its own work, after all. It is not human. Instead, it gets all its knowledge from the images, text, audio recordings, and other data it scraped from across the web.

Some tech companies use internal data sources to train their AI models. But most slurp up everything from Flickr and Instagram posts to paywalled articles and pirated books. Nothing appears to be safe from the crawl – not even private medical records.

Think of it this way: if there weren’t already a huge internet database full of photos, paintings, illustrations, and words, generative AI would have nothing to combine. Machine learning is, at its core, dependent on humans.

With few laws and regulations in place to govern the use of generative AI, it takes what it wants. And developers typically don’t pay a cent to the people whose work they’re feeding into their AI models. A quick look at social media is all it takes to discover the complicated feelings people have about AI.

This is just one piece of the thorny ethics surrounding generative AI. There’s a whole lot more – enough that the United Nations Educational, Scientific and Cultural Organization (UNESCO) has issued extensive recommendations for the ethical use of AI.

In other words, it’s important to use these tools with care.

How to use AI image generators ethically

If you’re using AI art as more than just a fun activity for a lazy Sunday, follow these guidelines for ethical use:

- Think twice before using AI art for profit. It’s tempting to use AI to make a quick buck, but it’s worth pausing to consider the ethics first. Will you use the generated images exactly as the AI art generator produced them? Then you risk putting out material that’s uncannily close to something that already exists. You’re better off using generated images as a launching pad for something that’s truly your own.

- Be transparent about your use of AI. Many mixed-media artists and graphic designers tell their audience about the materials or software they use to create art. If you’re using AI as a material in your illustrations, book covers, or advertisements, say so. Better yet, directly acknowledge the artists whose work helped produce your AI images.

- Use generative AI for inspiration, not for the end product. Many content creators use tools like ChatGPT to create outlines or work through writer’s block. But instead of having AI write the whole piece for them, they use that inspiration to create original work. You can do the same with AI art. Go beyond minor changes, and aim to fully rework whatever image Stable Diffusion or Midjourney generates.

With very few regulations in place to curb generative AI, it’s up to us to put up those guardrails. Artists are fighting back with tools that subtly “poison” the pixels of their art, making it less useful (or even harmful) for training AI models. It’s safe to say most artists aren’t happy with the way AI is compromising their livelihoods and the work they’ve done so far.

With all of that said – if you’re looking to use generative AI to inspire unique images for your marketing and creative assets, let’s explore some of the tools out there.

What is the best AI image generator?

Midjourney and Stable Diffusion are widely considered some of the best AI image generators around. Both use diffusion models, a type of deep learning model, to produce images from text prompts.

So what does that mean?

Essentially, diffusion models learn by reversing noise. During training, the model takes an image, adds random noise to it, and then learns to remove that noise, which teaches it how to “re-create” the original.

When you enter a prompt, the model starts from pure random noise and de-noises it step by step, guided by your text. The result usually isn’t an exact copy of any one training image, but it can come uncannily close to the work the model learned from.
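To make the training idea a little more concrete, here is a toy Python sketch of the noising step. It assumes the blending formula commonly used in diffusion models (the “forward process” from denoising diffusion probabilistic models), where a parameter we call `alpha_bar` controls how much of the original survives. The function names, the 4-pixel “image,” and the specific values are hypothetical illustrations; real models work on much larger image or latent arrays and use a trained neural network to estimate the noise.

```python
import math
import random


def add_noise(pixels, alpha_bar, rng):
    """Blend clean pixel values with Gaussian noise (toy forward-diffusion step).

    alpha_bar near 1.0 keeps most of the original image;
    alpha_bar near 0.0 leaves almost pure noise.
    Returns the noisy pixels and the exact noise that was added.
    """
    keep = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [keep * p + noise_scale * n for p, n in zip(pixels, noise)]
    return noisy, noise


def recover(noisy, noise, alpha_bar):
    """Undo add_noise when the noise is known exactly.

    A real model never sees the true noise; a neural network *estimates* it,
    which is why its reconstructions are approximate rather than exact.
    """
    keep = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [(x - noise_scale * n) / keep for x, n in zip(noisy, noise)]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the demo is repeatable
    image = [0.2, -0.5, 0.9, 0.0]  # hypothetical 4-pixel "image" scaled to [-1, 1]
    for alpha_bar in (0.99, 0.5, 0.01):
        noisy, noise = add_noise(image, alpha_bar, rng)
        restored = recover(noisy, noise, alpha_bar)
        print(alpha_bar, [round(v, 2) for v in noisy], [round(v, 2) for v in restored])
```

In this sketch, knowing the exact noise is enough to get the original back. A diffusion model is trained to guess that noise, and because its guesses are imperfect, what it produces is close to what it learned rather than a pixel-perfect copy.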

Each generation starts from random noise determined by a seed (a number that sets the starting point for the de-noising process), so different seeds produce different images from the same prompt. Or, you can reuse a seed yourself and adjust the prompt to generate variations of the same AI image.
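Here is a toy Python sketch of why seeds matter. The `starting_noise` helper is hypothetical, and Python’s built-in random number generator stands in for whatever generator a real image tool uses. The principle it shows is the same, though: the same seed always produces the same starting noise, so with the same prompt and settings you would usually get the same image back.

```python
import random


def starting_noise(seed, size=4):
    """Return the random starting noise a de-noising run would begin from.

    random.Random(seed) is an illustrative stand-in for the random number
    generators real image tools use; real noise arrays are far larger.
    """
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


same_a = starting_noise(42)
same_b = starting_noise(42)
different = starting_noise(7)

print(same_a == same_b)     # True: same seed, identical starting noise
print(same_a == different)  # False: a new seed gives a new starting point
```

Keeping the seed fixed while you tweak the prompt is what lets you explore variations of one image instead of starting from scratch each time.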

Developed and released by Stability AI, Stable Diffusion is an open-source diffusion model. This means you can generally run it for free on your own computer, as long as your graphics card (GPU) has enough memory. Or, you can use it through a web platform like DreamStudio, Stability AI’s own app.

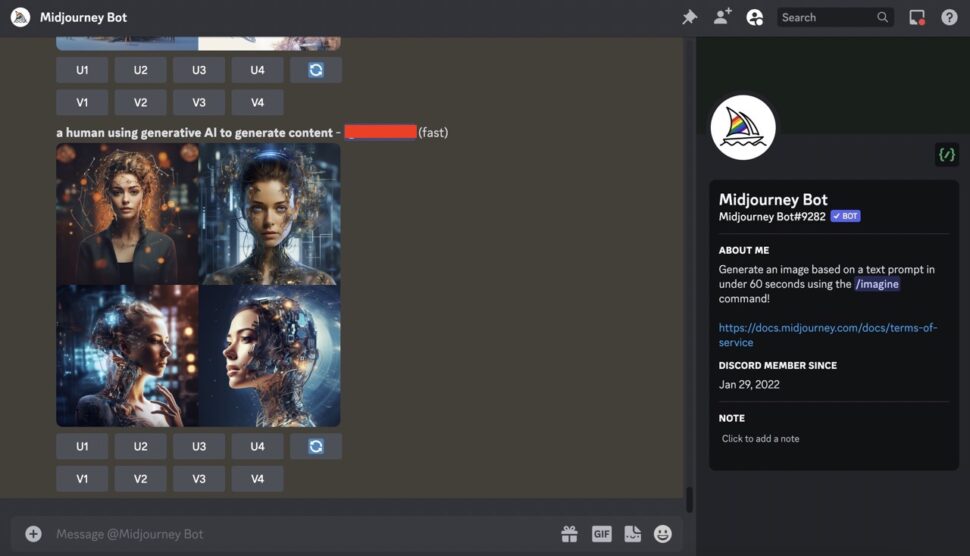

Midjourney, on the other hand, requires a paid subscription. And you can only access Midjourney through Discord, which makes it a little complicated.

Let’s take a look at how Midjourney and Stable Diffusion stack up.

Stable Diffusion vs Midjourney: A comparison

When it comes to ease of use, Midjourney wins – even though accessing it is a little bit of a maze. Once you’re on Discord and have paid for a Midjourney membership, producing images is easy.

Stable Diffusion, by contrast, has a steeper learning curve, largely because it offers far more customization options than Midjourney. Both models can use image prompts to generate art, but Stable Diffusion takes it a step further.

With Stable Diffusion, you can use inpainting to fill in missing or damaged parts of an image. If you want the image to expand beyond its borders, you can do that with outpainting. Stable Diffusion also lets you input a seed value and adjust the size of an image.

Midjourney is still in development and has some similar functions, but they’re not quite at Stable Diffusion’s level yet. Still, some users say Midjourney is more likely to produce high-quality images out of the box precisely because it has fewer customization options, which leaves less room for error.

We ran a PickFu poll to see what our respondents had to say. First, we ran the same prompt through Midjourney and Stable Diffusion: “A realistic looking marketing manager reviewing a strategy.”

Here’s what we got. Stable Diffusion’s image (Option A) is on the left, and Midjourney’s (Option B) is on the right. We asked a pool of 50 U.S.-based respondents which image fit the prompt best.

We weren’t surprised when Midjourney’s more realistic image won.

But we were intrigued by what our respondents had to say in the written feedback portion of the poll. They definitely found Option B more realistic, but Option A came with undeniable strengths too.

We’ll let the feedback for Option A (Stable Diffusion) and Option B (Midjourney) speak for itself:

- “While I like the [Option B] image a lot more for stylistic reasons, I’d have to say that [Option A] definitely fits my expectation of a modern manager at work a lot more. Desks and whiteboards, not corkboard collages like something out of the 50s.”

- “If by realistic you mean photorealistic, then it’s definitely Option B. Everything about this image looks less cartoonish and more detailed.”

- “Both of them are only about 1/2 way there. I think Option A captures the scene of a marketing manager much better, with the well-lit office environment and business charts, but it is in an illustration style not ‘realistic.’ Option B is much more realistic in style, but the setting doesn’t look like a business office.”

In short, respondents felt that Option A had a more realistic setting, while Option B was more photorealistic. So let’s say we needed an image that combined these two traits. We could take Option B and refine it in Midjourney so the setting looks more like a business office.

Or, we could bring the image into a more customization-friendly tool, such as Photoshop’s AI editing features, to create a unique image that meets all the right criteria.

Midjourney vs Stable Diffusion: which should you ultimately choose?

Choose the AI image generator that best fits your needs, and don’t be afraid to experiment with several AI art tools. Compare DALL-E 3, Midjourney, and Stable Diffusion XL side by side to find the one that produces the image you need.

Once you have a set of AI-generated images, you can test them on a customer insights platform like PickFu. If you run an Open-Ended poll, your PickFu target audience can give you suggestions for improving and customizing the image for your needs.

FAQs

What’s better: Midjourney, Stable Diffusion, or DALL-E 3?

The best image generator depends on what you’re using it for. Midjourney can produce lush, vivid images that resemble paintings or photos. DALL-E 3 tends toward a more cartoon-like style, but it can produce photorealistic images too. Stable Diffusion offers the most customization, as long as you know how to use it. Try pitting DALL-E 3 against Stable Diffusion or Midjourney and see which one gives you the most inspiration.

Is Midjourney better than Stable Diffusion XL?

It depends on your preference, but many users say the two tools are about equally good at producing AI images. Because Midjourney is a hosted service with fewer settings to configure, some people may find it more user-friendly than Stable Diffusion XL. As you play around with different prompts and customization options, you’ll learn which tool you like best.

What are the disadvantages of Midjourney?

Midjourney can only be accessed through a Discord bot, which makes it a little confusing to use. And while it’s an impressive tool, Midjourney is still in development and charges a monthly fee. Its upscaling options are also more limited than the many upscalers available for Stable Diffusion. Plus, Midjourney requires an internet connection, whereas Stable Diffusion can run locally on your own computer.

Where can I use DALL-E 3?

If you want easy access to DALL-E 3, go to bing.com/images, where Bing Image Creator is powered by DALL-E 3. You can practice your prompts there, including spelling out what you don’t want in the image, to refine your results.